Rather than worrying about the journalism jobs that may be lost as the latest artificial intelligence (AI) tools, such as Generative Pre-trained Transformer (GPT) language models, arrive in newsrooms, journalists should be more open to experimenting and finding new ways to apply those technologies to improve and enhance their work.

That was one of the insights that members of the panel “How can journalism incorporate AI, including generative tools like ChatGPT and Bard, to improve production and distribution of news?” agreed on during the first day of the 23rd International Symposium on Online Journalism (ISOJ), held April 14-15 on the campus of the University of Texas at Austin.

Aimee Rinehart, program manager of Local News AI at The Associated Press; Sisi Wei, editor-in-chief at The Markup; Jeremy Gilbert, Knight Chair of Digital Media Strategy at Northwestern University; and Sam Han, director of AI/ML and Zeus Technology at The Washington Post, made up the panel, which was moderated by Marc Lavallee, director of Technology, Product and Strategy in Journalism at the Knight Foundation.

Panelists agreed that transparency is going to be a key aspect in the use of AI-based generative tools in journalism. (Photo: Patricia Lim/Knight Center)

Many journalists fall into the trap of asking the wrong question, namely whether generative AI technologies are going to kill their jobs, when they should be exploring what the technology enables them to do better, Gilbert said.

“Every time we try to say: ‘Is GPT a better reporter than I am? Is GPT a better writer than I am?,’ it's a false comparison that just doesn't actually really matter. And as we start to explore what these tools and systems enable us to do, we're actually going to have much more sophisticated conversations soon, as these things become normal,” he said. “That's what we should be talking about as we look at GPT: where does it enable us to do more of the things that we cannot do, serve more of the needs of our audience that we cannot serve? Rather than, ‘Is this going to replace the journalist in the local newsroom?’”

As with previous technological breakthroughs, it is hard to predict whether generative AI tools will take journalism jobs away, and how any losses will be balanced by the jobs those same tools create. On that point, Wei said she believes new work opportunities will emerge before other jobs start to disappear.

Wei said the introduction of generative AI tools doesn’t mean that journalism as we know it is going to change completely. Rather, it is a matter of thinking about how those tools can help journalists do things they never thought possible.

“Every single job that I have had in journalism for the last 10 to 15 years has been a job that didn't exist 10 to 15 years before,” she said. “What the large language models are doing, or even the visual models, is something just much faster than we were used to. It's about that investment ... That's where I think that the more creative we are and the more we try to do different things, the more naturally those jobs will start to create themselves.”

Rinehart said now is a good moment for newsrooms to jump into AI language models and experiment with how to get more out of them. That is especially true for small newsrooms, since these technologies are getting cheaper as more companies join the race to develop better and more efficient models.

“I feel like it [AI generative technology] has never been more accessible to local newsrooms, which is why I'm really excited for them, the 3-5 person newsroom, the ones who are really struggling sharing basic information in the community,” she said. “And frankly, it's a bargain right now. There is an arms race between these six companies that are dealing with it and they're lowering the prices, so I think, again, for the next year or two, local newsrooms stand to really come out the winners on this, because they're able to experiment.”

Rinehart said the best current uses of large language models in local newsrooms include covering city hall meetings, police blotter items, school lunch menus and TV listings, topics that are useful to communities and that keep people subscribing to local media outlets.

Rinehart also talked about how newsrooms are currently using ChatGPT, the AI-driven natural language processing tool developed by the AI research company OpenAI. The tool, released in November 2022, has caused controversy in several countries over data concerns and claims of inaccuracy. Among the most common uses of ChatGPT in newsrooms, Rinehart mentioned generating summaries, creating outlines, adding hashtags, brainstorming headlines and quickly building HTML.

But big newsrooms are also taking advantage of tools like ChatGPT. Han described how OpenAI’s language model has added automation to The Washington Post’s own chatbot, especially in election coverage. The Post’s software engineers designed a routing process in which ChatGPT categorizes users’ questions and generates queries to The Post’s systems, which then respond in real time with journalistic information from the newspaper’s databases.

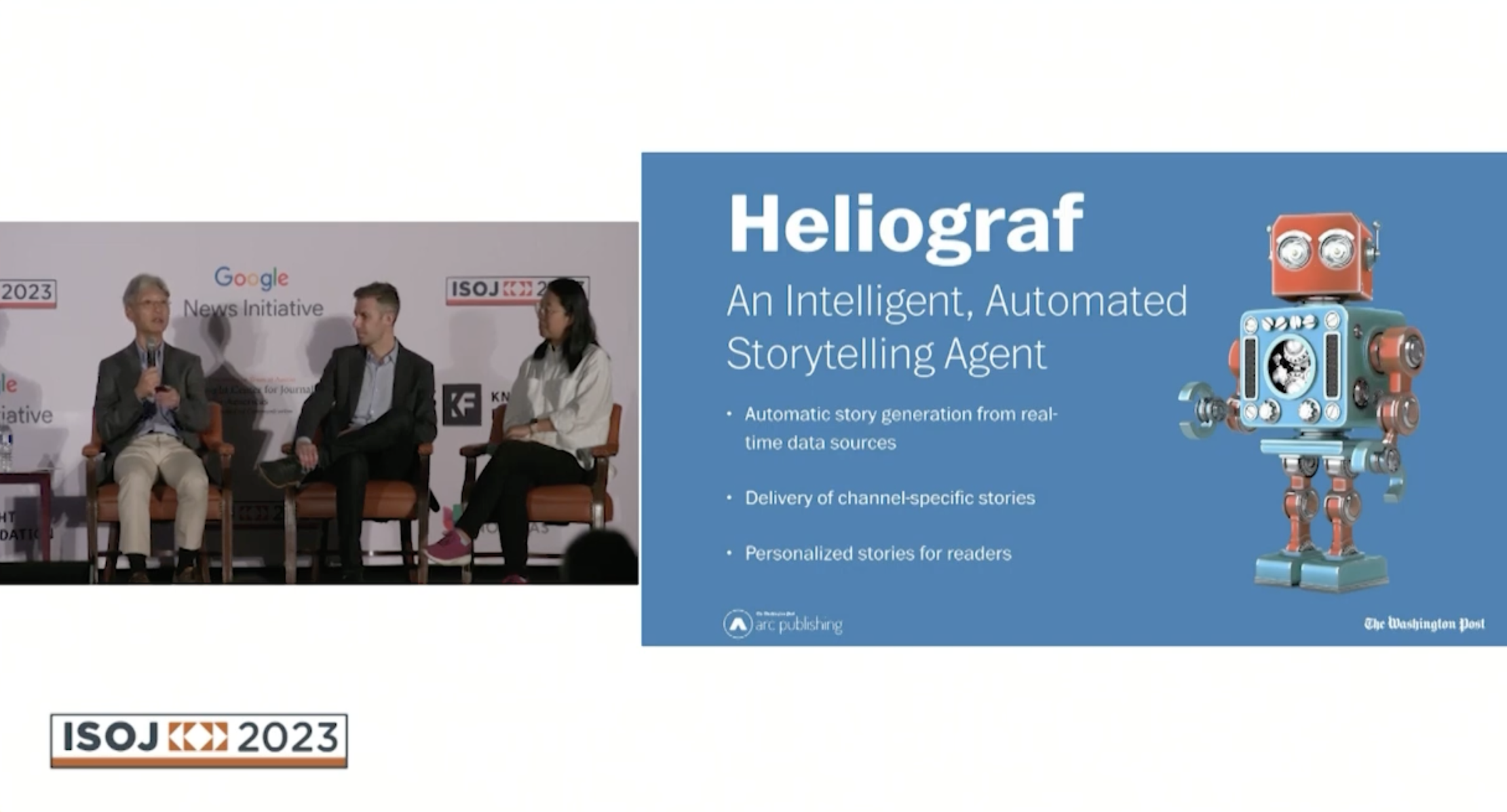

Heliograf, The Washington Post’s “robot reporter,” was launched in 2016 to enhance storytelling, mainly in data-driven coverage of major news events. (Photo: Screenshot of YouTube livestream)

That data is then crafted into a natural-language answer by ChatGPT and delivered to the user. In this way, the tool delivers an answer drawn from an authoritative source, unlike the regular ChatGPT website, whose sources are often questionable.

“We are curious to understand what the future of newsrooms look like, where an AI agent can help reporters gather information, analyze it, and provide suggestions. And we also want to explore what does storytelling mean in a world where users can ask questions or have a conversation with an AI chatbot for information,” Han said. “While we're trying to work to answer these questions, we also want to encourage innovation using AI, such that we can position ourselves as the leader in using AI for storytelling personalization, and potentially content creation. ... And while we are doing all this, we want to make sure that we keep the journalistic integrity and standards in mind.”

Han also reminded the audience of Heliograf, The Washington Post’s first “robot reporter,” launched in 2016. The AI-based tool, designed to enhance storytelling mainly in data-driven coverage of major news events, was introduced during the Rio Olympics to help journalists report the results of medal events.

However, Han said Heliograf has its limitations. One is the difficulty of opening the tool up as a free-form chatbot in which readers could ask questions or request pieces of content. Understanding the intent behind users’ questions is a very difficult task, Han said, and that is where ChatGPT has been of great help. In that sense, he said, ChatGPT has given The Washington Post the best of both worlds: automation combined with accuracy and reliable sources.

While several countries and institutions are debating whether to ban tools like ChatGPT, the panel also addressed ethical concerns about using those technologies in newsrooms and journalism schools. The experts agreed that, in academia, professors should design assignments that students cannot complete with generative AI tools and instead push meaningful training in skills such as reporting, writing, and editing, just as elementary school teachers still teach arithmetic even though calculators exist.

Wei added that teaching critical thinking in journalism schools is another way to push students to develop meaningful skills that won’t have to compete with AI technology in newsrooms. She suggested, for example, using ChatGPT to generate articles and having students edit them, turning that material into real journalism through additional reporting and fact-checking.

“I think it's great to teach journalism students how to edit, even though their job is not going to be editor out the gate, but through active editing they really interrogate every sentence, and it's a little different than fact-checking, but includes fact-checking,” she said. “And I've always just thought that journalism professors could use ChatGPT to generate articles on different topics that each individual student is interested in, and then assign them to edit that piece of work into an actually good piece of journalism.”

While generative AI tools can be helpful in the reporting stage of the journalism cycle, their real potential lies in the writing stage. Gilbert said large language models mimic the output of news stories more easily than they mimic reporting. Therefore, journalism schools should worry more about teaching good reporting skills than about banning AI technologies.

“A reporter is not automatically a writer, and these large language models are much better at generating things that look like writing than they are at doing meaningful reporting right now,” he said. “One of the things we need to train young journalists to do, people starting their careers, is to think about how can we ask questions that lead us in interesting directions, and then use large language models to tell those stories in all kinds of different ways. ... I'm not saying we don't need to teach writing at all, but if we have assignments that ChatGPT does just as well as our students, those assignments are no good.”

Panelists also agreed that transparency is going to be a key aspect in the use of AI-based generative tools in journalism. That includes letting readers know when a piece of information has been wholly or partly generated by an AI tool, and ensuring human supervision of all AI-related tasks in the newsroom.

Answering a question from an attendee, Han said The Washington Post has put guidelines in place in that regard: if anything is created by AI, a human must be in the loop to review the content before it is published.

“I think there are a few efforts on this front. One I'll mention is the Partnership on AI, which involves about 100+ organizations that recently published a synthetic media framework for creators and publishers to try to start to lay out some of those guidelines, not just for journalism but really for all media as well,” Lavallee said.