By Hanaa' Tameez at the Nieman Journalism Lab

In the 13 months since OpenAI launched ChatGPT, news organizations around the world have been experimenting with the technology in hopes of improving their news products and production processes — covering public meetings, strengthening investigative capabilities, powering translations.

With all of its flaws — including the ability to produce false information at scale and generate plain old bad writing — newsrooms are finding ways to make the technology work for them. In Brazil, one outlet has been integrating the OpenAI API with its existing journalism to produce a conversational Q&A chatbot. Meet FátimaGPT.

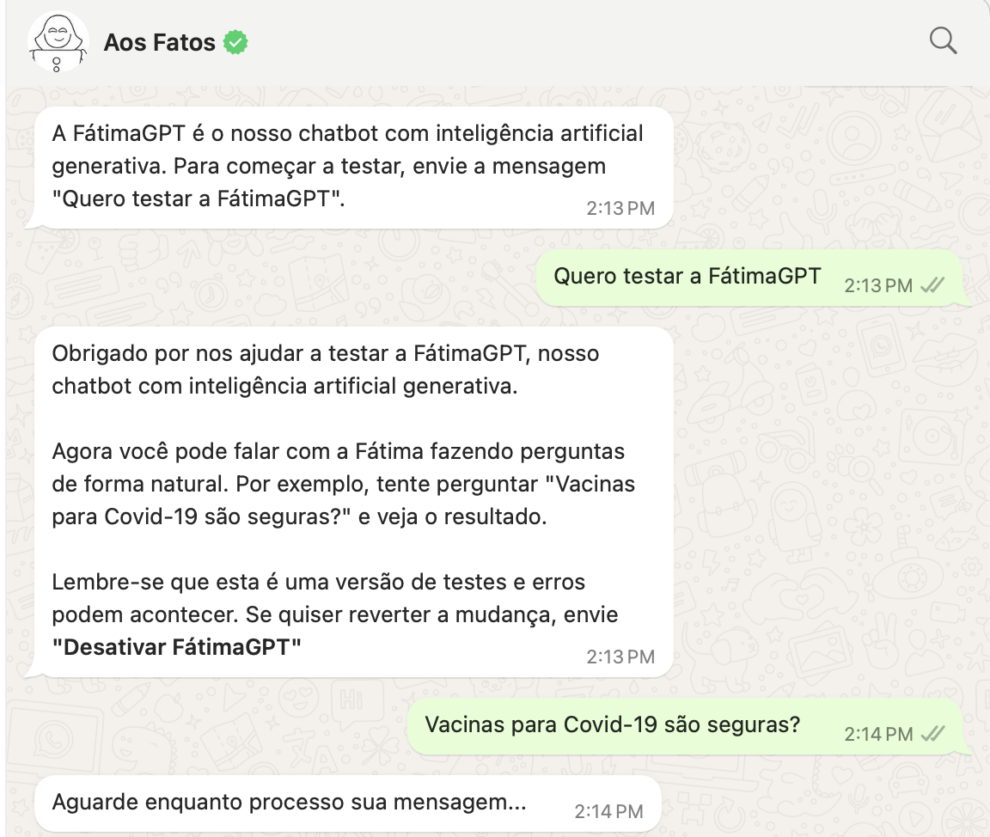

FátimaGPT is the latest iteration of Fátima, a fact-checking bot by Aos Fatos on WhatsApp, Telegram, and Twitter. Aos Fatos first launched Fátima as a chatbot in 2019. Aos Fatos (“The Facts” in Portuguese) is a Brazilian investigative news outlet that focuses on fact-checking and disinformation.

Bruno Fávero, Aos Fatos’s director of innovation, said that although the chatbot had over 75,000 users across platforms, it was limited in function. When users asked it a question, the bot would search Aos Fatos’s archives and use keyword comparison to return a (hopefully) relevant URL.

Fávero said that when OpenAI launched ChatGPT, he and his team started thinking about how they could use large language models in their work. “Fátima was the obvious candidate for that,” he said.

“AI is taking the world by storm, and it’s important for journalists to understand the ways it can be harmful and to try to educate the public on how bad actors may misuse AI,” Fávero said. “I think it’s also important for us to explore how it can be used to create tools that are useful for the public and that helps bring them reliable information.”

This past November, Aos Fatos launched the upgraded FátimaGPT, which pairs a large language model with Aos Fatos’s archives to give users a clear answer to their question, along with a source list of URLs. It’s available on WhatsApp, Telegram, and the web. In its first few weeks of beta, Fávero said that 94% of the answers analyzed were “adequate,” while 6% were “insufficient,” meaning the answer was in the database but FátimaGPT didn’t provide it. There were no factual mistakes in any of the results, he said.

I asked FátimaGPT through WhatsApp if COVID-19 vaccines are safe and it returned a thorough answer saying yes, along with a source list. On the other hand, I asked FátimaGPT for the lyrics to a Brazilian song I like and it answered, “Wow, I still don’t know how to answer that. I will take note of your message and forward it internally as I am still learning.”

Aos Fatos was concerned at first about implementing this sort of technology, particularly because of “hallucinations,” where ChatGPT presents false information as true. To reduce that risk, Aos Fatos uses a technique called retrieval-augmented generation, which links the large language model to a specific, reliable database to pull information from. In this case, the database is all of Aos Fatos’s journalism.

“If a user asks us, for instance, if elections in Brazil are safe and reliable, then we do a search in our database of fact-checks and articles,” Fávero explained. “Then we extract the most relevant paragraphs that may help answer this question. We put that in a prompt as context to the OpenAI API and [it] almost always gives a relevant answer. Instead of giving a list of URLs that the user can access — which requires more work for the user — we can answer the question they asked.”
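The flow Fávero describes (search the archive, pull the most relevant paragraphs, pass them to the model as context) can be illustrated with a minimal Python sketch. It is not Aos Fatos’s code. The `Paragraph` class, the `retrieve` and `build_prompt` functions, the example URLs, and the sample archive text are all hypothetical. Simple word overlap stands in for whatever search the team actually uses, and the call to the OpenAI API is left out, so the sketch stops at the prompt string.

```python
import re
from dataclasses import dataclass


@dataclass
class Paragraph:
    """One archive paragraph and the URL it came from (hypothetical schema)."""
    url: str
    text: str


# Toy stand-in for the archive; the URLs and text are invented for illustration.
ARCHIVE = [
    Paragraph("https://example.org/elections",
              "Electronic voting machines in Brazil have been audited "
              "and elections are considered safe."),
    Paragraph("https://example.org/vaccines",
              "COVID-19 vaccines approved in Brazil went through clinical trials."),
    Paragraph("https://example.org/radar",
              "Radar monitors disinformation on social media."),
]


def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its set of word tokens."""
    return set(re.findall(r"\w+", text.lower()))


def retrieve(question: str, archive: list[Paragraph], k: int = 2) -> list[Paragraph]:
    """Return up to k paragraphs that share the most words with the question.

    Word overlap is an assumption made for this sketch; a production system
    would more likely use a search index or embeddings.
    """
    q = tokenize(question)
    scored = [(len(q & tokenize(p.text)), p) for p in archive]
    scored = [(score, p) for score, p in scored if score > 0]  # drop non-matches
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [p for _, p in scored[:k]]


def build_prompt(question: str, context: list[Paragraph]) -> str:
    """Assemble a prompt that asks the model to answer only from the context."""
    sources = "\n".join(
        f"[{i}] {p.text} (source: {p.url})" for i, p in enumerate(context, 1)
    )
    return (
        "Answer the question using only the context below. "
        "If the context does not contain the answer, say you do not know.\n\n"
        f"Context:\n{sources}\n\n"
        f"Question: {question}"
    )


question = "Are elections in Brazil safe and reliable?"
prompt = build_prompt(question, retrieve(question, ARCHIVE))
print(prompt)  # this string is what would be sent to the language model
```

Keeping the source URL next to each paragraph in the prompt is one way an answer could carry a source list back to the user. Telling the model to admit when the context lacks an answer is a common guard against hallucination, though the sketch does not show how FátimaGPT itself handles that case.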

Aos Fatos has been experimenting with AI for years. Fátima is an audience-facing product, but Aos Fatos has also used AI to build the subscription audio transcription tool Escriba for journalists. Fávero said the idea came from the fact that journalists in his own newsroom would manually transcribe their interviews because it was hard to find a tool that transcribed Portuguese well. In 2019, Aos Fatos also launched Radar, a tool that uses algorithms to monitor disinformation campaigns on different social media platforms in real time.

Other newsrooms in Brazil are also using artificial intelligence in interesting ways. In October 2023, investigative outlet Agência Pública started using text-to-speech technology to read stories aloud to users. It uses AI to develop the story narrations in the voice of journalist Mariana Simões, who has hosted podcasts for Agência Pública. Núcleo, an investigative news outlet that covers the impacts of social media and AI, developed Legislatech, a tool to monitor and understand government documents.

Bruno Fávero, director of innovation at Aos Fatos. (Photo: Courtesy)

The use of artificial intelligence in Brazilian newsrooms is particularly interesting as trust in news in the country continues to decline. A 2023 study from KPMG found that 86% of Brazilians believe artificial intelligence is reliable, and 56% are willing to trust the technology.

Fávero said that one of the interesting trends in how people are using FátimaGPT is trying to test its potential biases. Users will often ask a question, for example, about the current president Luiz Inácio Lula da Silva, and then ask the same question about his political rival, former president Jair Bolsonaro. Or, users will ask one question about Israel and then ask the same question about Palestine to look for bias. The next step is developing FátimaGPT to accept YouTube links so that it can extract a video’s subtitles and fact-check the content against Aos Fatos’s journalism.

FátimaGPT’s results can only be as good as Aos Fatos’s existing coverage, which can be a challenge when users ask about a topic that hasn’t gotten much coverage. To mitigate that, Fávero’s team programmed FátimaGPT to provide the publication dates of the information it shares. This way, users can tell when the information they’re getting may be outdated.

“If you ask something about, [for instance], how many people were killed in the most recent Israeli-Palestinian conflict, it’s something that we were covering, but we’re [not] covering it that frequently,” Fávero said. “We try to compensate that by training [FátimaGPT] to be transparent with the user and providing as much context as possible.”