Two global organizations that promote journalism have launched initiatives that link the work of journalists with the use of artificial intelligence.

One of them seeks to have journalists monitor the impact of artificial intelligence on people's lives through investigative reporting. The other brings together media outlets from several countries and sets them to work on projects that enhance the work of journalists through algorithms and automation tools.

Both initiatives include a significant number of Latin American professionals. They will raise the profile of the region, and of the Spanish and Portuguese languages, in the global conversation about journalism and artificial intelligence.

As part of its mission to boost coverage of under-researched stories of global importance, the Pulitzer Center launched the AI Accountability Network earlier this year. This initiative aims to support journalistic investigations that address the use of artificial intelligence by governments and corporations and to create a global network of journalists who learn together and share knowledge on this topic.

In June, the organization introduced the 10 journalists, selected from various regions of the world, who make up the first cohort of fellows in its network. Representing Latin America are journalist Karen Naundorf and photojournalist Sarah Pabst, who are originally from Germany but have been working as journalists in Argentina for several years.

The Pulitzer Center's AI Accountability Network aims to support journalistic investigations that address the use of artificial intelligence by governments and corporations. (Photo: Pulitzer Center)

"We saw that there are very few journalists who are holding accountable algorithms that make decisions, impacting people's lives," Boyoung Lim, manager of the Pulitzer Center's artificial intelligence network, told LatAm Journalism Review (LJR). "We thought that this should be something that we can step in and build community and capacity and also hopefully collaboration among journalists to report on the impacts of AI and algorithm technologies in people's lives and communities as a whole."

Naundorf is the South America correspondent for the Swiss network SRF, and her work has been published in media such as Revista Anfibia, The Washington Post and Der Spiegel. Pabst is a member of Ayün Fotógrafas, a collective of eight women photographers who work on issues such as identity, human rights, women and the environment in Latin America. Last year, with support from the Pulitzer Center, the two published "Femicide Perpetrators in Uniform: System Errors," an investigative feature on victims of femicide at the hands of police officers in Argentina.

Within the AI Accountability Network, Naundorf and Pabst will work together on a written and visual journalism project about the use of automation technologies in Argentina and Brazil.

"They will combine their specialties to reach an even wider audience by visualizing some of the stories they will produce with Sarah's photography and other innovative visual effects," Lim said. "I'm super excited about this project because of the visual elements, how can photojournalists cover this type of AI stories that seem very technical and abstract."

In the project pitch for their AI Accountability Fellowship application, the journalists raised critical questions about how journalists should cover artificial intelligence tools that affect the lives of communities around the world, especially in Latin America.

"We live in an unequal continent. And we know that there is a risk of human biases being reproduced by AI systems, which could then reinforce social inequality," Naundorf told LJR. "AI often seems to be a topic that does not concern the public or over which it has no influence. [...] While there are citizens, universities and NGO initiatives that question the use of AI, and also seek judicial redress, these receive relatively little media coverage. For this very reason, we believe it's an important time to look to the continent right now, underlining the need for regulations and public debate."

The grantees will receive up to US$20,000 to carry out their projects during the remainder of 2022. In addition, they will receive mentoring and training sessions to strengthen their stories.

The stories are expected to be published between November of this year and January 2023. Above all, however, the Pulitzer Center hopes that collaborative projects will emerge among members of this first cohort, and that they will keep covering artificial intelligence even after the program ends.

"We're already seeing some potential collaborations among the fellows, it's very exciting. I can't talk a lot about the details, but there’s already some overlaps that we see among their projects and they are already talking about collaborations, so it's going to be very interesting to see in a few months from now," Lim said.

But why is it important for journalists to monitor the use of artificial intelligence? The Pulitzer Center believes that the application of these technologies in areas such as public safety, social programs, border control and human resources, among many others, can alter the dynamics of power, as these technologies are capable of making decisions that sometimes benefit the most privileged segments of society. Hence the need for accountability.

It is not a matter of persecuting anyone or demanding explanations, Lim said, but rather of journalists and eventually the general public being able to better understand these technologies, so they have the tools to question their use in vital areas of society.

"It's about looking at the technologies that are present in our daily lives with a lens of equity and accountability," she said. "Hopefully this first cohort is the beginning of a growing community of journalists around the world who agree with us that we should all be reporting on artificial intelligence. Also, that we need to be more aware of how these technologies are transforming and influencing our lives and sometimes disproportionately affecting minorities and marginalized groups."

After two years of carrying out the Collab Challenge initiative — an experiment that brought together journalists from different parts of the world to explore innovative solutions to improve journalism through artificial intelligence — the JournalismAI organization introduced the first cohort of its JournalismAI fellows in June 2022.

The fellowship is a revamped version of the Collab Challenge. Under it, 46 journalists and media technicians from 16 countries will work in ten teams for six months, developing projects that explore how the responsible use of artificial intelligence can help build more sustainable, inclusive and independent journalism around the world.

A total of 46 journalists and media technicians from 16 countries will work in ten teams for six months as part of the first JournalismAI Fellowship. (Photo: JournalismAI)

Unlike the previous challenges, the JournalismAI Fellowship invites not only journalists but also technical media professionals, who will team up with partners from other news organizations to work on shared projects.

Among the 46 selected participants are representatives from five Latin American journalism organizations. Schirlei Alves and Reinaldo Chaves, from Abraji (Brazil), will team up with Fernanda Aguirre and Gibrán Mena, from Data Crítica (Mexico); Lucila Pinto and Nicolás Russo, from Grupo Octubre (Argentina), will work in the same team as Sara Campos and Eduardo Ayala, from El Surti (Paraguay); and Juliana Fregoso and Matías Contreras, from Infobae (Argentina), will pair up in another team.

"The ten Fellowship teams continue the JournalismAI tradition of fostering cross-border and interdisciplinary collaboration, with data scientists, reporters, product managers, researchers and software engineers working together with peers from news organizations in different continents – including collaborations between legacy brands and digital media," the organization, based at the London School of Economics and Political Science, said in a statement.

The teams' projects propose applying artificial intelligence to tasks such as monitoring hate speech, detecting misleading content, analyzing images automatically and handling large amounts of data. Through their projects, the Latin American participants will seek to promote the use of these technologies in news outlets of the Global South.

El Surti and Grupo Octubre agreed to develop a tool that identifies, classifies and describes images using computer vision. The tool, provisionally named Image2Text, is intended to recognize objects and people in video, still images and infographics and to describe them in both English and Spanish.

Given the visual nature of the news outlet El Surti, the tool could help its content be consumed by a wider audience, including the visually impaired, and perform better in search engines.

"The main thing that makes our content not rank well in [Google] searches is because these are images, illustrations, and these are also illustrations that are sometimes super editorial, so they are difficult for robots to read," Sara Campos, a team member and product editor of the Paraguayan media outlet, told LJR. "We began to see that there was also a need on the part of our colleagues to improve, above all, SEO [search engine optimization], organic and natural distribution [of their content]."

The Image2Text team knows that six months is not enough time to fully develop its tool. Even so, its members have challenged themselves to deliver, by the end of the program, at least a prototype that has proven effective in at least one specific case.

"We were talking recently and said 'let's not focus on the interface, let's focus on making it work for now for a specific case,'" Campos said. "Maybe there's not going to be an interface, but there's going to be a code repository, an open library on GitHub. [...] What we're doing now is just figuring out in which case it's going to work."

Grupo Octubre and GMA News Online, the Philippine news outlet that rounds out the Image2Text team, had submitted similar proposals for image recognition tools. All three media outlets said they had encountered biases while experimenting with these artificial intelligence tools.

The Argentine outlet had previously developed an image recognition tool called Visión Latina, as part of the Google News Initiative's Innovation Challenge 2021, which sought to identify important figures in the Latinx world in images and videos, in Spanish.

However, the team found that computer vision was far less accurate at identifying figures from the Global South than figures from the Global North.

"This is an enormous bias," Campos said. "So, our proposal to create a library of [visual] languages from the south for the south is also a political stance against these models. We'll see if we can pull it off, because it's a challenge."

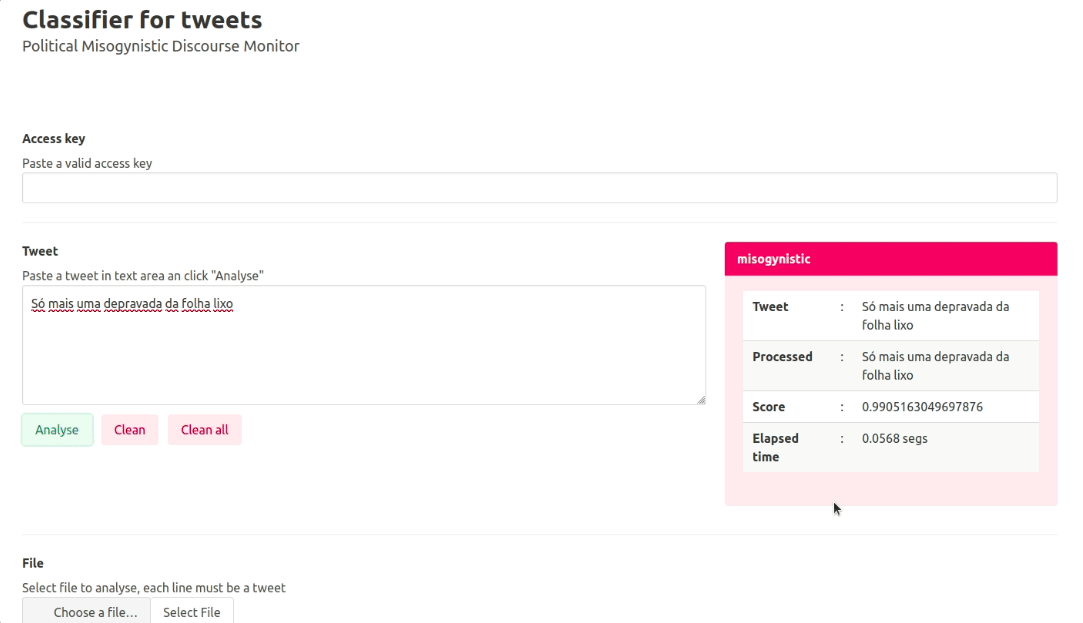

The Misogynist Political Discourse Monitor is a tool developed by journalists from Latin American newsrooms as part of the previous JournalismAI initiative. (Photo: Courtesy)

Infobae's representatives encountered a similar bias: English is still the language in which artificial intelligence works best. The team that includes the Argentina-based outlet, along with Sky News (UK) and Il Sole 24 Ore (Italy), plans to develop a tool that works in English, Spanish and Italian. It will track topics, brands and products shared by influencers on social networks and detect potentially harmful or misleading content.

"We indeed have a long way to go to get to that point where artificial intelligence can still speak other languages perfectly," Juliana Fregoso, project manager for artificial intelligence at Infobae, told LJR. "I think language is also a barrier. We in Latin America speak Spanish or Portuguese, and there are very few journalists who actually speak English, so that is an impediment [to the use of artificial intelligence in journalism]."

Drawing on its members' backgrounds, the team intends to complement its project with financial journalism and data journalism methodologies. On the technical side, it plans to use natural language processing (NLP) and to build large databases with Python, a programming language commonly used by data journalists.

The idea is to assemble large databases from social media accounts. The tool will extract what is published on those accounts, process it and score each item according to how misleading or harmful it is. This could help journalists investigate stories about misleading advertising on digital platforms.

"We will have to feed in files, which we will most likely put together in Python, what it is that we want to extract from those accounts and how they will alert us to find out when products are being advertised or language is being used that could be potentially misleading," Fregoso explained.
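The extract, process and score pipeline Fregoso describes can be illustrated with a minimal Python sketch. It is not Infobae's tool. A hand-written, hypothetical list of weighted phrases stands in for the trained NLP model the team plans to use. The names `Post`, `score_post` and `flag_posts`, the phrases, their weights and the threshold are all illustrative assumptions.

```python
from dataclasses import dataclass

# Hypothetical phrase weights. A real system would learn signals like these
# with a trained NLP model instead of relying on a hand-written list.
MISLEADING_PHRASES = {
    "guaranteed results": 3,
    "miracle": 2,
    "no side effects": 2,
    "100% natural": 1,
}


@dataclass
class Post:
    """One piece of content extracted from a social media account."""
    account: str
    text: str


def score_post(post: Post) -> int:
    """Sum the weights of every (hypothetical) flagged phrase found in the post."""
    text = post.text.lower()
    return sum(weight for phrase, weight in MISLEADING_PHRASES.items() if phrase in text)


def flag_posts(posts: list[Post], threshold: int = 2) -> list[tuple[str, int, str]]:
    """Return (account, score, text) for posts at or above the threshold, highest score first."""
    scored = [(p.account, score_post(p), p.text) for p in posts]
    flagged = [item for item in scored if item[1] >= threshold]
    return sorted(flagged, key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    sample = [
        Post("@brand_a", "Miracle cream with guaranteed results!"),
        Post("@brand_b", "Our new store opens on Monday."),
    ]
    for account, score, text in flag_posts(sample):
        print(account, score, text)
```

Plain substring matching misses paraphrases, sarcasm and context, and it only works in the language the phrase list is written in. Those limits are the reason a team like this would turn to NLP models trained for each language it covers.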

The team formed by Abraji and Data Crítica, for its part, will develop Attack Detector, a language model that detects hate speech on social networks in Spanish and Portuguese. It is aimed mainly at journalists and environmental activists.

Data Crítica had previously taken part in JournalismAI's 2021 Collab Challenge, where it helped develop the Misogynist Political Discourse Monitor. The tool uses a trained natural language processing model to detect hate speech against women online in Spanish and Portuguese.

The JournalismAI Fellowship is funded by the Google News Initiative, and its teams receive guidance from the Knight Lab at Northwestern University. It intends these projects not to replace the work of journalists but to add value to it, by automating repetitive tasks that would otherwise take people a great deal of time and effort.

"I think we can begin to work on a hybrid artificial intelligence, in which one part is effectively done by technology and the rest is done by journalists," Fregoso said. "I don't see the use of artificial intelligence in journalism as a threat that it will take away our jobs. I see it as an opportunity to reinvent journalism."