A video in which the voice of Mexican journalist Carlos Loret de Mola is heard calling into question an interurban park project in the state of Querétaro circulated on social networks at the end of 2023. The video gives the impression it’s part of a report like those the journalist presents on his program “Loret” on the Latinus digital network.

However, a few days later, Loret de Mola himself denied the authenticity of the video through his social media accounts and said the voice was not his. Some media outlets reported that it was a video generated with artificial intelligence (AI) and suggested that it could have been part of a strategy to discredit the municipal president of the city of Querétaro, who presented the park project discussed in the video.

As in the case of Loret de Mola, videos have circulated in Mexico in recent months that use the voices of personalities such as then-presidential candidate for the ruling party Claudia Sheinbaum, telecommunications magnate Carlos Slim or Argentine soccer player Lionel Messi to promote products or applications that promise to generate income easily.

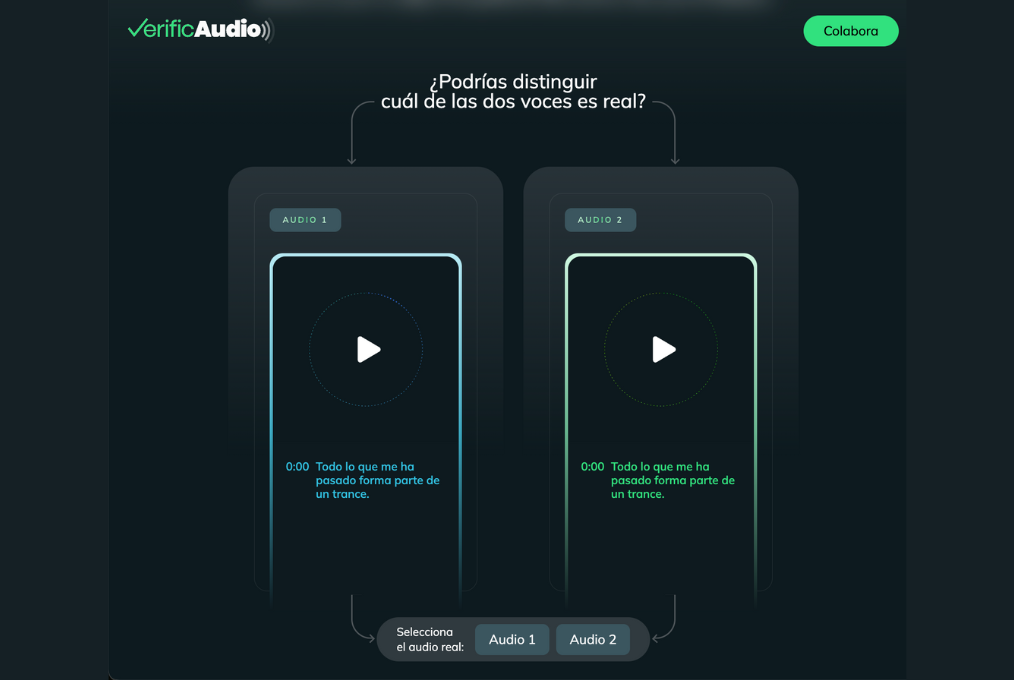

VerificAudio consists of two Natural Language Processing (NLP) models, one identifying and the other comparative. (Photo: Screenshot from VerificAudio website)

Carlos Urdiales, director of station W Radio in Mexico, where Loret de Mola heads the midday news program, believes that the technology that made the video with the journalist's cloned voice possible could be extremely dangerous this year in Mexico, considering the country just held the largest election in its history.

For this reason, he said, his station embraced the VerificAudio project from the beginning. It’s an AI tool that seeks to combat disinformation in audio content. The tool was developed by Spanish media group PRISA, of which W Radio and almost 30 other radio station brands in Spain and Latin America are a part.

“Artificial intelligence as a tool we know poses challenges to us as journalists, due to deep fakes, due to the mega electoral year at a global level,” Urdiales told LatAm Journalism Review (LJR). “Having the correct use of artificial intelligence available [...] is of enormous value, especially in terms of our responsibility to our listeners.”

VerificAudio was presented in March 2024, after which it underwent a trial period of almost three months, during which the AI models that make it up continued to be trained. Additionally, teams from PRISA stations Cadena SER (Spain), ADN (Chile), Caracol (Colombia) and W Radio (Mexico) were trained to integrate the tool with their fact-checking protocols. Although VerificAudio has so far been available only to PRISA stations, Ana Ormaechea, digital product director at PRISA Radio, said the company is already considering opening it to other journalists.

“The tool is already at a high level of maturity and we are willing to open collaboration with other media that are interested,” Ormaechea told LJR.

The rapid improvement of voice cloning techniques using AI and their misuse are two of the most worrying changes that the media ecosystem in Spanish-speaking countries is currently experiencing, Ormaechea said.

Therefore, for PRISA Radio, VerificAudio is a tool to combat the increasingly sophisticated deep fakes in audio and thereby safeguard the trust that its stations have managed to consolidate among their listeners over several decades.

“This year we are seeing more than half of the [global] population vote. We have important votes in Mexico, in the United States, and here [in Spain] we have the European ones. We know that whenever there is an important electoral period, unfortunately, there are situations of disinformation,” Ormaechea said. “We were very afraid that with this advance in technology and the application of AI to voice cloning, and this electoral situation, there will be a large increase in disinformation through audio in 2024.”

PRISA commissioned the Spanish technology company Minsait to develop VerificAudio, and Minsait worked on it for about a year. The project had the support of the Google News Initiative, both in the development of the idea and in the funds for its execution.

Google News Initiative decided to support the project because it aligns with two of the pillars that the technology company currently seeks to promote: the development of AI and the fight against disinformation.

“VerificAudio joins a group of key tools that journalists can use when verifying information. This is a clear example of how Artificial Intelligence can help journalism and information in the right way,” Haydée Bagú, News Partner Manager of Google Latin America, told LJR.

VerificAudio consists of two Natural Language Processing (NLP) models, one identifying and the other comparative. The identifying model consists of a machine learning model that compares the suspicious material with audio from the PRISA station files based on a set of predetermined indicators, and provides a percentage of authenticity, Ormaechea explained.

The voice of president-elect of Mexico, Claudia Sheinbaum, was manipulated in videos during her electoral campaign. (Photo: Screenshot of the Morena party's Facebook page)

The comparative model consists of an open-source model known as a transformer neural network, which was subjected to a fine-tuning process to adapt it to Spanish. According to Google, a transformer is an NLP model that transforms an input sequence into an output sequence, for which it “learns” context elements and the relationships between the components of said sequences.

VerificAudio's transformer model converts each piece of audio into a series of vectors that represent the particularities of the voice, such as timbre, intonation, and speech patterns, and then compares them with those of pre-existing audio to detect signs of cloning.
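The comparison step described above can be illustrated with a minimal sketch. It assumes each audio clip has already been turned into a fixed-length vector of numbers and uses cosine similarity, a common way to compare such vectors. The article does not say which measure VerificAudio uses, and the function names `cosine_similarity` and `best_reference_match` are hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine similarity of two equal-length voice vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("vectors must be non-zero")
    return dot / (norm_a * norm_b)


def best_reference_match(suspect, references):
    """Return the highest similarity between a suspect vector and any
    vector from a set of reference recordings (assumed to be archive
    audio of the real speaker)."""
    return max(cosine_similarity(suspect, ref) for ref in references)
```

A score near 1 only indicates that the voice characteristics are close to those of the reference recordings. How VerificAudio turns such a comparison into a judgment about cloning is not detailed in the article.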

This double-checking protocol seeks to increase the precision of the tool and minimize the number of false results. Both components have been trained by PRISA with audio of real voices and cloned voices, said Ormaechea, who added that the company's priority is to continue training VerificAudio with the support of subsidiary stations in Spain and Latin America, so that the tool is capable of recognizing and analyzing voices with different Spanish accents.
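To make the idea of a double check concrete, here is a minimal sketch of how two independent authenticity percentages could be read together. It is an illustration only: the function name `double_check`, the labels and the 70 and 30 percent thresholds are assumptions, not details of VerificAudio.

```python
def double_check(identifying_pct, comparative_pct, high=70.0, low=30.0):
    """Combine two authenticity percentages into a cautious label.

    The thresholds `high` and `low` are hypothetical values chosen
    for illustration, not figures published by PRISA.
    """
    for value in (identifying_pct, comparative_pct):
        if not 0.0 <= value <= 100.0:
            raise ValueError("scores must be percentages between 0 and 100")
    if identifying_pct >= high and comparative_pct >= high:
        return "likely authentic"
    if identifying_pct <= low and comparative_pct <= low:
        return "likely cloned"
    # The models disagree or are unsure, so no label is given.
    return "inconclusive"
```

Requiring both scores to agree before giving a label means a mistake by one model alone leads to an "inconclusive" result rather than a wrong one.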

“The bad guys move very fast. Every day we wake up with a new advance. We need to continue training the models on a daily basis so that they can keep up with the effectiveness of this disinformation. We increasingly see that these deep fakes are of better quality, that they are being distributed very well,” Ormaechea said.

After presenting the tool in March, PRISA launched a website with an open VerificAudio interface to introduce it to the general public and let users submit suspicious audio for analysis. Meanwhile, the tool's models were further trained to refine its results and “accustom it” to the accents of each country where PRISA operates, before it was put into use in its newsrooms.

Ormaechea said that the company created a transversal verification committee, made up of members of the news staff from each of the PRISA stations. This committee is responsible for analyzing the audio sent by the public through the VerificAudio website and also uses this audio to continue with the training of the models.

Those staff members are the ones who will eventually be in charge of the tool at each station. At W Radio, two members of the newsroom were appointed, one from the digital team and another from news production, Emmanuel Manzano, director of digital media at W Radio, told LJR.

“After this training or learning curve on the part of the newsroom, in the coming weeks they will begin to use it practically,” said Manzano, who said he hoped that the Mexican station would begin using the tool in early June.

VerificAudio is not intended to replace newsroom verification protocols, nor to validate or debunk audio content. PRISA's intention with the tool is that it provides an evaluation of the audio characteristics and a reliability percentage so that journalists can make a final decision under the fact-checking standards of their media outlets.

“That's why this committee exists [in each station], because it's not just about putting it [the suspicious audio] into the tool and having it validate it,” Manzano said. “You also have to give context to the audio itself, where it came from, what generated it, the validity of the information that is being heard in the audio... There is much more than just putting it into the tool, there is a whole process.”

Journalist Carlos Loret de Mola is another victim of voice cloning. The station that broadcasts his newscast, W Radio, is one of those participating in the VerificAudio project. (Photo: Screenshot from W Radio's Facebook page)

Urdiales added that at W Radio they do not expect VerificAudio to replace journalists in content verification tasks and said that the context analysis carried out by journalists is essential to debunking deep fakes.

“VerificAudio provides this possibility of entering, processing and having firmer data and more certainty of that rigor of knowing that it is very likely that [a piece of content] is authentic or very likely that it is not, and making decisions based on that,” Urdiales said. “But at the end of this process of constructing informative journalistic content, the institutions and structures of the professionals on a news desk continue to have great weight.”

For the journalist, the tool arrived in his newsroom at a very opportune time, a few months before the presidential elections that took place on June 2 in Mexico. However, he said that, although there were some cases of audio with supposedly cloned voices of politicians during the country's electoral campaign period, these were relatively easy to debunk and did not have a major effect on the course of the electoral process.

Ormaechea said that as of the beginning of June, VerificAudio's public interface had received deep fakes mostly from Venezuela and Mexico, but also from other countries such as the United States and Cuba.

“Our goal was to make [VerificAudio] very quickly to get it up and running internally and be able to continue testing and improving it before elections in Mexico,” Ormaechea said. “For us, the elections in Mexico, and I believe that those in the United States –because there will also be deep fakes in Spanish in the United States– would be the focus.”

However, for Urdiales, what is coming in the near future is more worrying in terms of the level of sophistication of deep fakes and the impact they may have on the credibility of the news media.

“Every time you realize that there are audio recordings that you say 'my gosh, if it is already making me doubt, it means that the general public can buy it very easily,'” he said. “I am more concerned about what is coming, and the fact that we have this tool, I think it prepares us to be able to do more.”

Manzano added that VerificAudio has contributed to raising awareness among W Radio journalists about how much AI can do in journalism, both for good and for bad.

“This tool also has a great objective, not only to identify [manipulated content], but also to raise awareness among our newsroom and help them learn more about it,” Manzano said.